diff --git a/DOCSTRING_STYLE.md b/DOCSTRING_STYLE.md

new file mode 100644

index 000000000..77b6dc90a

--- /dev/null

+++ b/DOCSTRING_STYLE.md

@@ -0,0 +1,499 @@

+# DataJoint Python Docstring Style Guide

+

+This document defines the canonical docstring format for datajoint-python.

+All public APIs must follow this NumPy-style format for consistency and

+automated documentation generation via mkdocstrings.

+

+## Quick Reference

+

+```python

+def function(param1, param2, *, keyword_only=None):

+ """

+ Short one-line summary (imperative mood, no period).

+

+ Extended description providing context and details. May span

+ multiple lines. Explain what the function does, not how.

+

+ Parameters

+ ----------

+ param1 : type

+ Description of param1.

+ param2 : type

+ Description of param2.

+ keyword_only : type, optional

+ Description. Default is None.

+

+ Returns

+ -------

+ type

+ Description of return value.

+

+ Raises

+ ------

+ ExceptionType

+ When and why this exception is raised.

+

+ Examples

+ --------

+ >>> result = function("value", 42)

+ >>> print(result)

+ expected_output

+

+ See Also

+ --------

+ related_function : Brief description.

+

+ Notes

+ -----

+ Additional technical notes, algorithms, or implementation details.

+ """

+```

+

+---

+

+## Module Docstrings

+

+Every module must begin with a docstring explaining its purpose.

+

+```python

+"""

+Connection management for DataJoint.

+

+This module provides the Connection class that manages database connections,

+transaction handling, and query execution. It also provides the ``conn()``

+function for accessing a persistent shared connection.

+

+Key Components

+--------------

+Connection : class

+ Manages a single database connection with transaction support.

+conn : function

+ Returns a persistent connection object shared across modules.

+

+Example

+-------

+>>> import datajoint as dj

+>>> connection = dj.conn()

+>>> connection.query("SHOW DATABASES")

+"""

+```

+

+---

+

+## Class Docstrings

+

+```python

+class Table(QueryExpression):

+ """

+ Base class for all DataJoint tables.

+

+ Table implements data manipulation (insert, delete, update) and inherits

+ query functionality from QueryExpression. Concrete table classes must

+ define the ``definition`` property specifying the table structure.

+

+ Parameters

+ ----------

+ None

+ Tables are typically instantiated via schema decoration, not directly.

+

+ Attributes

+ ----------

+ definition : str

+ DataJoint table definition string (DDL).

+ primary_key : list of str

+ Names of primary key attributes.

+ heading : Heading

+ Table heading with attribute metadata.

+

+ Examples

+ --------

+ Define a table using the schema decorator:

+

+ >>> @schema

+ ... class Mouse(dj.Manual):

+ ... definition = '''

+ ... mouse_id : int

+ ... ---

+ ... dob : date

+ ... sex : enum("M", "F", "U")

+ ... '''

+

+ Insert data:

+

+ >>> Mouse.insert1({"mouse_id": 1, "dob": "2024-01-15", "sex": "M"})

+

+ See Also

+ --------

+ Manual : Table for manually entered data.

+ Computed : Table for computed results.

+ QueryExpression : Query operator base class.

+ """

+```

+

+---

+

+## Method Docstrings

+

+### Standard Method

+

+```python

+def insert(self, rows, *, replace=False, skip_duplicates=False, ignore_extra_fields=False):

+ """

+ Insert one or more rows into the table.

+

+ Parameters

+ ----------

+ rows : iterable

+ Rows to insert. Each row can be:

+ - dict: ``{"attr": value, ...}``

+ - numpy.void: Record array element

+ - sequence: Values in heading order

+ - QueryExpression: Results of a query

+ - pathlib.Path: Path to CSV file

+ replace : bool, optional

+ If True, replace existing rows with matching primary keys.

+ Default is False.

+ skip_duplicates : bool, optional

+ If True, silently skip rows that would cause duplicate key errors.

+ Default is False.

+ ignore_extra_fields : bool, optional

+ If True, ignore fields not in the table heading.

+ Default is False.

+

+ Returns

+ -------

+ None

+

+ Raises

+ ------

+ DuplicateError

+ When inserting a row with an existing primary key and neither

+ ``replace`` nor ``skip_duplicates`` is True.

+ DataJointError

+ When required attributes are missing or types are incompatible.

+

+ Examples

+ --------

+ Insert a single row:

+

+ >>> Mouse.insert1({"mouse_id": 1, "dob": "2024-01-15", "sex": "M"})

+

+ Insert multiple rows:

+

+ >>> Mouse.insert([

+ ... {"mouse_id": 2, "dob": "2024-02-01", "sex": "F"},

+ ... {"mouse_id": 3, "dob": "2024-02-15", "sex": "M"},

+ ... ])

+

+ Insert from a query:

+

+ >>> TargetTable.insert(SourceTable & "condition > 5")

+

+ See Also

+ --------

+ insert1 : Insert exactly one row.

+ """

+```

+

+### Method with Complex Return

+

+```python

+def fetch(self, *attrs, offset=None, limit=None, order_by=None, format=None, as_dict=False):

+ """

+ Retrieve data from the table.

+

+ Parameters

+ ----------

+ *attrs : str

+ Attribute names to fetch. If empty, fetches all attributes.

+ Use "KEY" to fetch primary key as dict.

+ offset : int, optional

+ Number of rows to skip. Default is None (no offset).

+ limit : int, optional

+ Maximum number of rows to return. Default is None (no limit).

+ order_by : str or list of str, optional

+ Attribute(s) to sort by. Use "KEY" for primary key order,

+ append " DESC" for descending. Default is None (unordered).

+ format : {"array", "frame"}, optional

+ Output format when fetching all attributes:

+ - "array": numpy structured array (default)

+ - "frame": pandas DataFrame

+ as_dict : bool, optional

+ If True, return list of dicts instead of structured array.

+ Default is False.

+

+ Returns

+ -------

+ numpy.ndarray or list of dict or pandas.DataFrame

+ Query results in the requested format:

+ - Single attribute: 1D array of values

+ - Multiple attributes: tuple of 1D arrays

+ - No attributes specified: structured array, DataFrame, or list of dicts

+

+ Examples

+ --------

+ Fetch all data as structured array:

+

+ >>> data = Mouse.fetch()

+

+ Fetch specific attributes:

+

+ >>> ids, dobs = Mouse.fetch("mouse_id", "dob")

+

+ Fetch as list of dicts:

+

+ >>> rows = Mouse.fetch(as_dict=True)

+ >>> for row in rows:

+ ... print(row["mouse_id"])

+

+ Fetch with ordering and limit:

+

+ >>> recent = Mouse.fetch(order_by="dob DESC", limit=10)

+

+ See Also

+ --------

+ fetch1 : Fetch exactly one row.

+ head : Fetch first N rows ordered by key.

+ tail : Fetch last N rows ordered by key.

+ """

+```

+

+### Generator Method

+

+```python

+def make(self, key):

+ """

+ Compute and insert results for one key.

+

+ This method must be implemented by subclasses of Computed or Imported

+ tables. It is called by ``populate()`` for each key in ``key_source``

+ that is not yet in the table.

+

+ The method can be implemented in two ways:

+

+ **Simple mode** (regular method):

+ Fetch, compute, and insert within a single transaction.

+

+ **Tripartite mode** (generator method):

+ Split into ``make_fetch``, ``make_compute``, ``make_insert`` for

+ long-running computations with deferred transactions.

+

+ Parameters

+ ----------

+ key : dict

+ Primary key values identifying the entity to compute.

+

+ Yields

+ ------

+ tuple

+ In tripartite mode, yields fetched data and computed results.

+

+ Raises

+ ------

+ NotImplementedError

+ If neither ``make`` nor the tripartite methods are implemented.

+

+ Examples

+ --------

+ Simple implementation:

+

+ >>> class ProcessedData(dj.Computed):

+ ... definition = '''

+ ... -> RawData

+ ... ---

+ ... result : float

+ ... '''

+ ...

+ ... def make(self, key):

+ ... raw = (RawData & key).fetch1("data")

+ ... result = expensive_computation(raw)

+ ... self.insert1({**key, "result": result})

+

+ See Also

+ --------

+ populate : Execute make for all pending keys.

+ key_source : Query defining keys to populate.

+ """

+```

+

+---

+

+## Property Docstrings

+

+```python

+@property

+def primary_key(self):

+ """

+ list of str : Names of primary key attributes.

+

+ The primary key uniquely identifies each row in the table.

+ Derived from the table definition.

+

+ Examples

+ --------

+ >>> Mouse.primary_key

+ ['mouse_id']

+ """

+ return self.heading.primary_key

+```

+

+---

+

+## Parameter Types

+

+Use these type annotations in docstrings:

+

+| Python Type | Docstring Format |

+|-------------|------------------|

+| `str` | `str` |

+| `int` | `int` |

+| `float` | `float` |

+| `bool` | `bool` |

+| `None` | `None` |

+| `list` | `list` or `list of str` |

+| `dict` | `dict` or `dict[str, int]` |

+| `tuple` | `tuple` or `tuple of (str, int)` |

+| Optional | `str or None` or `str, optional` |

+| Union | `str or int` |

+| Literal | `{"option1", "option2"}` |

+| Callable | `callable` |

+| Class | `ClassName` |

+| Any | `object` |

+

+---

+

+## Section Order

+

+Sections must appear in this order (include only relevant sections):

+

+1. **Short Summary** (required) - One line, imperative mood

+2. **Deprecation Warning** - If applicable

+3. **Extended Summary** - Additional context

+4. **Parameters** - Input arguments

+5. **Returns** / **Yields** - Output values

+6. **Raises** - Exceptions

+7. **Warns** - Warnings issued

+8. **See Also** - Related functions/classes

+9. **Notes** - Technical details

+10. **References** - Citations

+11. **Examples** (strongly encouraged) - Usage demonstrations

+

+---

+

+## Style Rules

+

+### Do

+

+- Use imperative mood: "Insert rows" not "Inserts rows"

+- Start with capital letter, no period at end of summary

+- Document all public methods

+- Include at least one example for public APIs

+- Use backticks for code: ``parameter``, ``ClassName``

+- Reference related items in See Also

+

+### Don't

+

+- Don't document private methods extensively (brief is fine)

+- Don't repeat the function signature in the description

+- Don't use "This function..." or "This method..."

+- Don't include implementation details in Parameters

+- Don't use first person ("I", "we")

+

+---

+

+## Examples Section Best Practices

+

+```python

+"""

+Examples

+--------

+Basic usage:

+

+>>> table.insert1({"id": 1, "value": 42})

+

+With options:

+

+>>> table.insert(rows, skip_duplicates=True)

+

+Error handling:

+

+>>> try:

+... table.insert1({"id": 1}) # duplicate

+... except dj.errors.DuplicateError:

+... print("Already exists")

+Already exists

+"""

+```

+

+---

+

+## Converting from Sphinx Style

+

+Replace Sphinx-style docstrings:

+

+```python

+# Before (Sphinx style)

+def method(self, param1, param2):

+ """

+ Brief description.

+

+ :param param1: Description of param1.

+ :type param1: str

+ :param param2: Description of param2.

+ :type param2: int

+ :returns: Description of return value.

+ :rtype: bool

+ :raises ValueError: When param1 is empty.

+ """

+

+# After (NumPy style)

+def method(self, param1, param2):

+ """

+ Brief description.

+

+ Parameters

+ ----------

+ param1 : str

+ Description of param1.

+ param2 : int

+ Description of param2.

+

+ Returns

+ -------

+ bool

+ Description of return value.

+

+ Raises

+ ------

+ ValueError

+ When param1 is empty.

+ """

+```

+

+---

+

+## Validation

+

+Docstrings are validated by:

+

+1. **mkdocstrings** - Parses for API documentation

+2. **ruff** - Linting (D100-D417 rules when enabled)

+3. **pytest --doctest-modules** - Executes examples

+

+Run locally:

+

+```bash

+# Build docs to check parsing

+mkdocs build --config-file docs/mkdocs.yaml

+

+# Check docstring examples

+pytest --doctest-modules src/datajoint/

+```

+

+---

+

+## References

+

+- [NumPy Docstring Guide](https://numpydoc.readthedocs.io/en/latest/format.html)

+- [mkdocstrings Python Handler](https://mkdocstrings.github.io/python/)

+- [PEP 257 - Docstring Conventions](https://peps.python.org/pep-0257/)

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 000000000..3f8b99424

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,190 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to the Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ Copyright 2014-2026 DataJoint Inc. and contributors

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/LICENSE.txt b/LICENSE.txt

deleted file mode 100644

index 90f4edaaa..000000000

--- a/LICENSE.txt

+++ /dev/null

@@ -1,504 +0,0 @@

- GNU LESSER GENERAL PUBLIC LICENSE

- Version 2.1, February 1999

-

- Copyright (C) 1991, 1999 Free Software Foundation, Inc.

- 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA

- Everyone is permitted to copy and distribute verbatim copies

- of this license document, but changing it is not allowed.

-

-(This is the first released version of the Lesser GPL. It also counts

- as the successor of the GNU Library Public License, version 2, hence

- the version number 2.1.)

-

- Preamble

-

- The licenses for most software are designed to take away your

-freedom to share and change it. By contrast, the GNU General Public

-Licenses are intended to guarantee your freedom to share and change

-free software--to make sure the software is free for all its users.

-

- This license, the Lesser General Public License, applies to some

-specially designated software packages--typically libraries--of the

-Free Software Foundation and other authors who decide to use it. You

-can use it too, but we suggest you first think carefully about whether

-this license or the ordinary General Public License is the better

-strategy to use in any particular case, based on the explanations below.

-

- When we speak of free software, we are referring to freedom of use,

-not price. Our General Public Licenses are designed to make sure that

-you have the freedom to distribute copies of free software (and charge

-for this service if you wish); that you receive source code or can get

-it if you want it; that you can change the software and use pieces of

-it in new free programs; and that you are informed that you can do

-these things.

-

- To protect your rights, we need to make restrictions that forbid

-distributors to deny you these rights or to ask you to surrender these

-rights. These restrictions translate to certain responsibilities for

-you if you distribute copies of the library or if you modify it.

-

- For example, if you distribute copies of the library, whether gratis

-or for a fee, you must give the recipients all the rights that we gave

-you. You must make sure that they, too, receive or can get the source

-code. If you link other code with the library, you must provide

-complete object files to the recipients, so that they can relink them

-with the library after making changes to the library and recompiling

-it. And you must show them these terms so they know their rights.

-

- We protect your rights with a two-step method: (1) we copyright the

-library, and (2) we offer you this license, which gives you legal

-permission to copy, distribute and/or modify the library.

-

- To protect each distributor, we want to make it very clear that

-there is no warranty for the free library. Also, if the library is

-modified by someone else and passed on, the recipients should know

-that what they have is not the original version, so that the original

-author's reputation will not be affected by problems that might be

-introduced by others.

-

- Finally, software patents pose a constant threat to the existence of

-any free program. We wish to make sure that a company cannot

-effectively restrict the users of a free program by obtaining a

-restrictive license from a patent holder. Therefore, we insist that

-any patent license obtained for a version of the library must be

-consistent with the full freedom of use specified in this license.

-

- Most GNU software, including some libraries, is covered by the

-ordinary GNU General Public License. This license, the GNU Lesser

-General Public License, applies to certain designated libraries, and

-is quite different from the ordinary General Public License. We use

-this license for certain libraries in order to permit linking those

-libraries into non-free programs.

-

- When a program is linked with a library, whether statically or using

-a shared library, the combination of the two is legally speaking a

-combined work, a derivative of the original library. The ordinary

-General Public License therefore permits such linking only if the

-entire combination fits its criteria of freedom. The Lesser General

-Public License permits more lax criteria for linking other code with

-the library.

-

- We call this license the "Lesser" General Public License because it

-does Less to protect the user's freedom than the ordinary General

-Public License. It also provides other free software developers Less

-of an advantage over competing non-free programs. These disadvantages

-are the reason we use the ordinary General Public License for many

-libraries. However, the Lesser license provides advantages in certain

-special circumstances.

-

- For example, on rare occasions, there may be a special need to

-encourage the widest possible use of a certain library, so that it becomes

-a de-facto standard. To achieve this, non-free programs must be

-allowed to use the library. A more frequent case is that a free

-library does the same job as widely used non-free libraries. In this

-case, there is little to gain by limiting the free library to free

-software only, so we use the Lesser General Public License.

-

- In other cases, permission to use a particular library in non-free

-programs enables a greater number of people to use a large body of

-free software. For example, permission to use the GNU C Library in

-non-free programs enables many more people to use the whole GNU

-operating system, as well as its variant, the GNU/Linux operating

-system.

-

- Although the Lesser General Public License is Less protective of the

-users' freedom, it does ensure that the user of a program that is

-linked with the Library has the freedom and the wherewithal to run

-that program using a modified version of the Library.

-

- The precise terms and conditions for copying, distribution and

-modification follow. Pay close attention to the difference between a

-"work based on the library" and a "work that uses the library". The

-former contains code derived from the library, whereas the latter must

-be combined with the library in order to run.

-

- GNU LESSER GENERAL PUBLIC LICENSE

- TERMS AND CONDITIONS FOR COPYING, DISTRIBUTION AND MODIFICATION

-

- 0. This License Agreement applies to any software library or other

-program which contains a notice placed by the copyright holder or

-other authorized party saying it may be distributed under the terms of

-this Lesser General Public License (also called "this License").

-Each licensee is addressed as "you".

-

- A "library" means a collection of software functions and/or data

-prepared so as to be conveniently linked with application programs

-(which use some of those functions and data) to form executables.

-

- The "Library", below, refers to any such software library or work

-which has been distributed under these terms. A "work based on the

-Library" means either the Library or any derivative work under

-copyright law: that is to say, a work containing the Library or a

-portion of it, either verbatim or with modifications and/or translated

-straightforwardly into another language. (Hereinafter, translation is

-included without limitation in the term "modification".)

-

- "Source code" for a work means the preferred form of the work for

-making modifications to it. For a library, complete source code means

-all the source code for all modules it contains, plus any associated

-interface definition files, plus the scripts used to control compilation

-and installation of the library.

-

- Activities other than copying, distribution and modification are not

-covered by this License; they are outside its scope. The act of

-running a program using the Library is not restricted, and output from

-such a program is covered only if its contents constitute a work based

-on the Library (independent of the use of the Library in a tool for

-writing it). Whether that is true depends on what the Library does

-and what the program that uses the Library does.

-

- 1. You may copy and distribute verbatim copies of the Library's

-complete source code as you receive it, in any medium, provided that

-you conspicuously and appropriately publish on each copy an

-appropriate copyright notice and disclaimer of warranty; keep intact

-all the notices that refer to this License and to the absence of any

-warranty; and distribute a copy of this License along with the

-Library.

-

- You may charge a fee for the physical act of transferring a copy,

-and you may at your option offer warranty protection in exchange for a

-fee.

-

- 2. You may modify your copy or copies of the Library or any portion

-of it, thus forming a work based on the Library, and copy and

-distribute such modifications or work under the terms of Section 1

-above, provided that you also meet all of these conditions:

-

- a) The modified work must itself be a software library.

-

- b) You must cause the files modified to carry prominent notices

- stating that you changed the files and the date of any change.

-

- c) You must cause the whole of the work to be licensed at no

- charge to all third parties under the terms of this License.

-

- d) If a facility in the modified Library refers to a function or a

- table of data to be supplied by an application program that uses

- the facility, other than as an argument passed when the facility

- is invoked, then you must make a good faith effort to ensure that,

- in the event an application does not supply such function or

- table, the facility still operates, and performs whatever part of

- its purpose remains meaningful.

-

- (For example, a function in a library to compute square roots has

- a purpose that is entirely well-defined independent of the

- application. Therefore, Subsection 2d requires that any

- application-supplied function or table used by this function must

- be optional: if the application does not supply it, the square

- root function must still compute square roots.)

-

-These requirements apply to the modified work as a whole. If

-identifiable sections of that work are not derived from the Library,

-and can be reasonably considered independent and separate works in

-themselves, then this License, and its terms, do not apply to those

-sections when you distribute them as separate works. But when you

-distribute the same sections as part of a whole which is a work based

-on the Library, the distribution of the whole must be on the terms of

-this License, whose permissions for other licensees extend to the

-entire whole, and thus to each and every part regardless of who wrote

-it.

-

-Thus, it is not the intent of this section to claim rights or contest

-your rights to work written entirely by you; rather, the intent is to

-exercise the right to control the distribution of derivative or

-collective works based on the Library.

-

-In addition, mere aggregation of another work not based on the Library

-with the Library (or with a work based on the Library) on a volume of

-a storage or distribution medium does not bring the other work under

-the scope of this License.

-

- 3. You may opt to apply the terms of the ordinary GNU General Public

-License instead of this License to a given copy of the Library. To do

-this, you must alter all the notices that refer to this License, so

-that they refer to the ordinary GNU General Public License, version 2,

-instead of to this License. (If a newer version than version 2 of the

-ordinary GNU General Public License has appeared, then you can specify

-that version instead if you wish.) Do not make any other change in

-these notices.

-

- Once this change is made in a given copy, it is irreversible for

-that copy, so the ordinary GNU General Public License applies to all

-subsequent copies and derivative works made from that copy.

-

- This option is useful when you wish to copy part of the code of

-the Library into a program that is not a library.

-

- 4. You may copy and distribute the Library (or a portion or

-derivative of it, under Section 2) in object code or executable form

-under the terms of Sections 1 and 2 above provided that you accompany

-it with the complete corresponding machine-readable source code, which

-must be distributed under the terms of Sections 1 and 2 above on a

-medium customarily used for software interchange.

-

- If distribution of object code is made by offering access to copy

-from a designated place, then offering equivalent access to copy the

-source code from the same place satisfies the requirement to

-distribute the source code, even though third parties are not

-compelled to copy the source along with the object code.

-

- 5. A program that contains no derivative of any portion of the

-Library, but is designed to work with the Library by being compiled or

-linked with it, is called a "work that uses the Library". Such a

-work, in isolation, is not a derivative work of the Library, and

-therefore falls outside the scope of this License.

-

- However, linking a "work that uses the Library" with the Library

-creates an executable that is a derivative of the Library (because it

-contains portions of the Library), rather than a "work that uses the

-library". The executable is therefore covered by this License.

-Section 6 states terms for distribution of such executables.

-

- When a "work that uses the Library" uses material from a header file

-that is part of the Library, the object code for the work may be a

-derivative work of the Library even though the source code is not.

-Whether this is true is especially significant if the work can be

-linked without the Library, or if the work is itself a library. The

-threshold for this to be true is not precisely defined by law.

-

- If such an object file uses only numerical parameters, data

-structure layouts and accessors, and small macros and small inline

-functions (ten lines or less in length), then the use of the object

-file is unrestricted, regardless of whether it is legally a derivative

-work. (Executables containing this object code plus portions of the

-Library will still fall under Section 6.)

-

- Otherwise, if the work is a derivative of the Library, you may

-distribute the object code for the work under the terms of Section 6.

-Any executables containing that work also fall under Section 6,

-whether or not they are linked directly with the Library itself.

-

- 6. As an exception to the Sections above, you may also combine or

-link a "work that uses the Library" with the Library to produce a

-work containing portions of the Library, and distribute that work

-under terms of your choice, provided that the terms permit

-modification of the work for the customer's own use and reverse

-engineering for debugging such modifications.

-

- You must give prominent notice with each copy of the work that the

-Library is used in it and that the Library and its use are covered by

-this License. You must supply a copy of this License. If the work

-during execution displays copyright notices, you must include the

-copyright notice for the Library among them, as well as a reference

-directing the user to the copy of this License. Also, you must do one

-of these things:

-

- a) Accompany the work with the complete corresponding

- machine-readable source code for the Library including whatever

- changes were used in the work (which must be distributed under

- Sections 1 and 2 above); and, if the work is an executable linked

- with the Library, with the complete machine-readable "work that

- uses the Library", as object code and/or source code, so that the

- user can modify the Library and then relink to produce a modified

- executable containing the modified Library. (It is understood

- that the user who changes the contents of definitions files in the

- Library will not necessarily be able to recompile the application

- to use the modified definitions.)

-

- b) Use a suitable shared library mechanism for linking with the

- Library. A suitable mechanism is one that (1) uses at run time a

- copy of the library already present on the user's computer system,

- rather than copying library functions into the executable, and (2)

- will operate properly with a modified version of the library, if

- the user installs one, as long as the modified version is

- interface-compatible with the version that the work was made with.

-

- c) Accompany the work with a written offer, valid for at

- least three years, to give the same user the materials

- specified in Subsection 6a, above, for a charge no more

- than the cost of performing this distribution.

-

- d) If distribution of the work is made by offering access to copy

- from a designated place, offer equivalent access to copy the above

- specified materials from the same place.

-

- e) Verify that the user has already received a copy of these

- materials or that you have already sent this user a copy.

-

- For an executable, the required form of the "work that uses the

-Library" must include any data and utility programs needed for

-reproducing the executable from it. However, as a special exception,

-the materials to be distributed need not include anything that is

-normally distributed (in either source or binary form) with the major

-components (compiler, kernel, and so on) of the operating system on

-which the executable runs, unless that component itself accompanies

-the executable.

-

- It may happen that this requirement contradicts the license

-restrictions of other proprietary libraries that do not normally

-accompany the operating system. Such a contradiction means you cannot

-use both them and the Library together in an executable that you

-distribute.

-

- 7. You may place library facilities that are a work based on the

-Library side-by-side in a single library together with other library

-facilities not covered by this License, and distribute such a combined

-library, provided that the separate distribution of the work based on

-the Library and of the other library facilities is otherwise

-permitted, and provided that you do these two things:

-

- a) Accompany the combined library with a copy of the same work

- based on the Library, uncombined with any other library

- facilities. This must be distributed under the terms of the

- Sections above.

-

- b) Give prominent notice with the combined library of the fact

- that part of it is a work based on the Library, and explaining

- where to find the accompanying uncombined form of the same work.

-

- 8. You may not copy, modify, sublicense, link with, or distribute

-the Library except as expressly provided under this License. Any

-attempt otherwise to copy, modify, sublicense, link with, or

-distribute the Library is void, and will automatically terminate your

-rights under this License. However, parties who have received copies,

-or rights, from you under this License will not have their licenses

-terminated so long as such parties remain in full compliance.

-

- 9. You are not required to accept this License, since you have not

-signed it. However, nothing else grants you permission to modify or

-distribute the Library or its derivative works. These actions are

-prohibited by law if you do not accept this License. Therefore, by

-modifying or distributing the Library (or any work based on the

-Library), you indicate your acceptance of this License to do so, and

-all its terms and conditions for copying, distributing or modifying

-the Library or works based on it.

-

- 10. Each time you redistribute the Library (or any work based on the

-Library), the recipient automatically receives a license from the

-original licensor to copy, distribute, link with or modify the Library

-subject to these terms and conditions. You may not impose any further

-restrictions on the recipients' exercise of the rights granted herein.

-You are not responsible for enforcing compliance by third parties with

-this License.

-

- 11. If, as a consequence of a court judgment or allegation of patent

-infringement or for any other reason (not limited to patent issues),

-conditions are imposed on you (whether by court order, agreement or

-otherwise) that contradict the conditions of this License, they do not

-excuse you from the conditions of this License. If you cannot

-distribute so as to satisfy simultaneously your obligations under this

-License and any other pertinent obligations, then as a consequence you

-may not distribute the Library at all. For example, if a patent

-license would not permit royalty-free redistribution of the Library by

-all those who receive copies directly or indirectly through you, then

-the only way you could satisfy both it and this License would be to

-refrain entirely from distribution of the Library.

-

-If any portion of this section is held invalid or unenforceable under any

-particular circumstance, the balance of the section is intended to apply,

-and the section as a whole is intended to apply in other circumstances.

-

-It is not the purpose of this section to induce you to infringe any

-patents or other property right claims or to contest validity of any

-such claims; this section has the sole purpose of protecting the

-integrity of the free software distribution system which is

-implemented by public license practices. Many people have made

-generous contributions to the wide range of software distributed

-through that system in reliance on consistent application of that

-system; it is up to the author/donor to decide if he or she is willing

-to distribute software through any other system and a licensee cannot

-impose that choice.

-

-This section is intended to make thoroughly clear what is believed to

-be a consequence of the rest of this License.

-

- 12. If the distribution and/or use of the Library is restricted in

-certain countries either by patents or by copyrighted interfaces, the

-original copyright holder who places the Library under this License may add

-an explicit geographical distribution limitation excluding those countries,

-so that distribution is permitted only in or among countries not thus

-excluded. In such case, this License incorporates the limitation as if

-written in the body of this License.

-

- 13. The Free Software Foundation may publish revised and/or new

-versions of the Lesser General Public License from time to time.

-Such new versions will be similar in spirit to the present version,

-but may differ in detail to address new problems or concerns.

-

-Each version is given a distinguishing version number. If the Library

-specifies a version number of this License which applies to it and

-"any later version", you have the option of following the terms and

-conditions either of that version or of any later version published by

-the Free Software Foundation. If the Library does not specify a

-license version number, you may choose any version ever published by

-the Free Software Foundation.

-

- 14. If you wish to incorporate parts of the Library into other free

-programs whose distribution conditions are incompatible with these,

-write to the author to ask for permission. For software which is

-copyrighted by the Free Software Foundation, write to the Free

-Software Foundation; we sometimes make exceptions for this. Our

-decision will be guided by the two goals of preserving the free status

-of all derivatives of our free software and of promoting the sharing

-and reuse of software generally.

-

- NO WARRANTY

-

- 15. BECAUSE THE LIBRARY IS LICENSED FREE OF CHARGE, THERE IS NO

-WARRANTY FOR THE LIBRARY, TO THE EXTENT PERMITTED BY APPLICABLE LAW.

-EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT HOLDERS AND/OR

-OTHER PARTIES PROVIDE THE LIBRARY "AS IS" WITHOUT WARRANTY OF ANY

-KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE

-IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

-PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE

-LIBRARY IS WITH YOU. SHOULD THE LIBRARY PROVE DEFECTIVE, YOU ASSUME

-THE COST OF ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

-

- 16. IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN

-WRITING WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MAY MODIFY

-AND/OR REDISTRIBUTE THE LIBRARY AS PERMITTED ABOVE, BE LIABLE TO YOU

-FOR DAMAGES, INCLUDING ANY GENERAL, SPECIAL, INCIDENTAL OR

-CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OR INABILITY TO USE THE

-LIBRARY (INCLUDING BUT NOT LIMITED TO LOSS OF DATA OR DATA BEING

-RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD PARTIES OR A

-FAILURE OF THE LIBRARY TO OPERATE WITH ANY OTHER SOFTWARE), EVEN IF

-SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH

-DAMAGES.

-

- END OF TERMS AND CONDITIONS

-

- How to Apply These Terms to Your New Libraries

-

- If you develop a new library, and you want it to be of the greatest

-possible use to the public, we recommend making it free software that

-everyone can redistribute and change. You can do so by permitting

-redistribution under these terms (or, alternatively, under the terms of the

-ordinary General Public License).

-

- To apply these terms, attach the following notices to the library. It is

-safest to attach them to the start of each source file to most effectively

-convey the exclusion of warranty; and each file should have at least the

-"copyright" line and a pointer to where the full notice is found.

-

- {description}

- Copyright (C) {year} {fullname}

-

- This library is free software; you can redistribute it and/or

- modify it under the terms of the GNU Lesser General Public

- License as published by the Free Software Foundation; either

- version 2.1 of the License, or (at your option) any later version.

-

- This library is distributed in the hope that it will be useful,

- but WITHOUT ANY WARRANTY; without even the implied warranty of

- MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

- Lesser General Public License for more details.

-

- You should have received a copy of the GNU Lesser General Public

- License along with this library; if not, write to the Free Software

- Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301

- USA

-

-Also add information on how to contact you by electronic and paper mail.

-

-You should also get your employer (if you work as a programmer) or your

-school, if any, to sign a "copyright disclaimer" for the library, if

-necessary. Here is a sample; alter the names:

-

- Yoyodyne, Inc., hereby disclaims all copyright interest in the

- library `Frob' (a library for tweaking knobs) written by James Random

- Hacker.

-

- {signature of Ty Coon}, 1 April 1990

- Ty Coon, President of Vice

-

-That's all there is to it!

diff --git a/README.md b/README.md

index f4a6f8352..85c3269e7 100644

--- a/README.md

+++ b/README.md

@@ -1,116 +1,69 @@

-# Welcome to DataJoint for Python!

+# DataJoint for Python

+

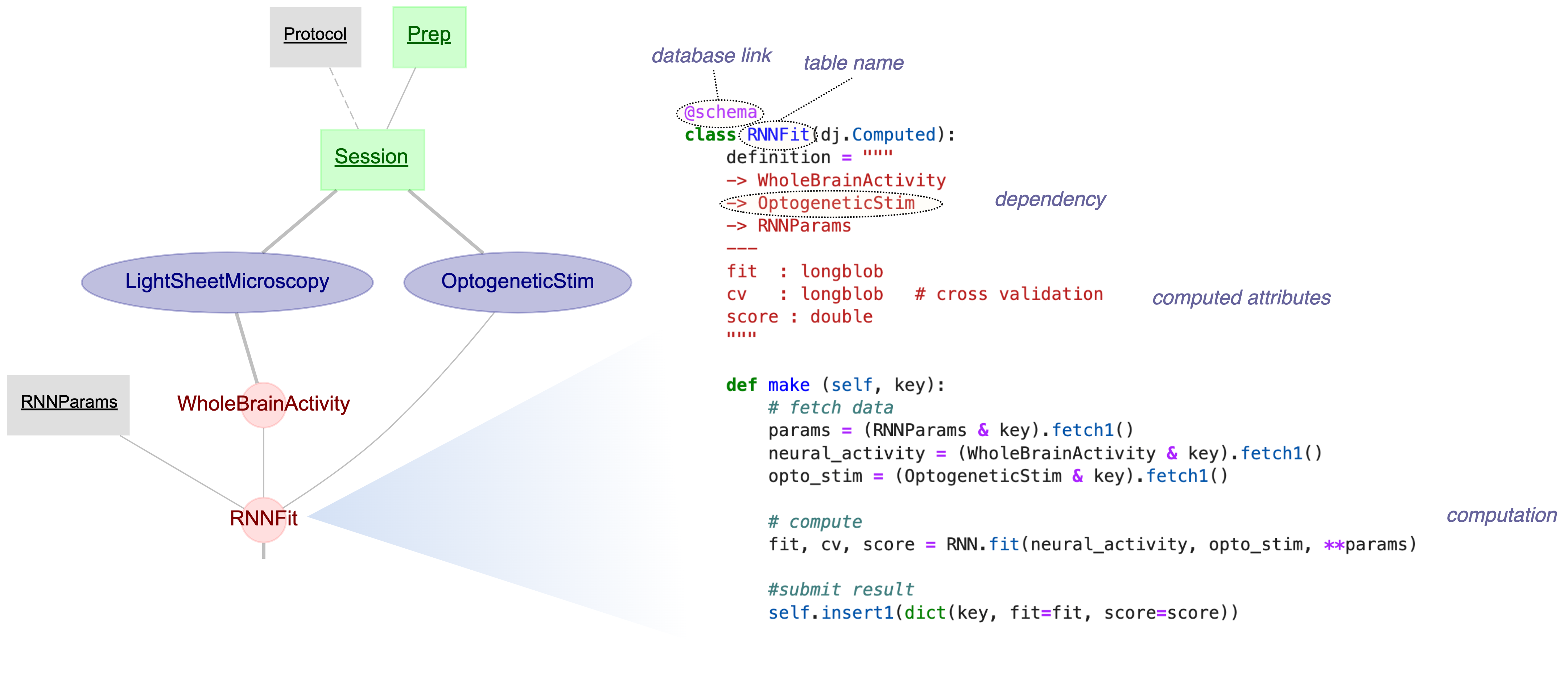

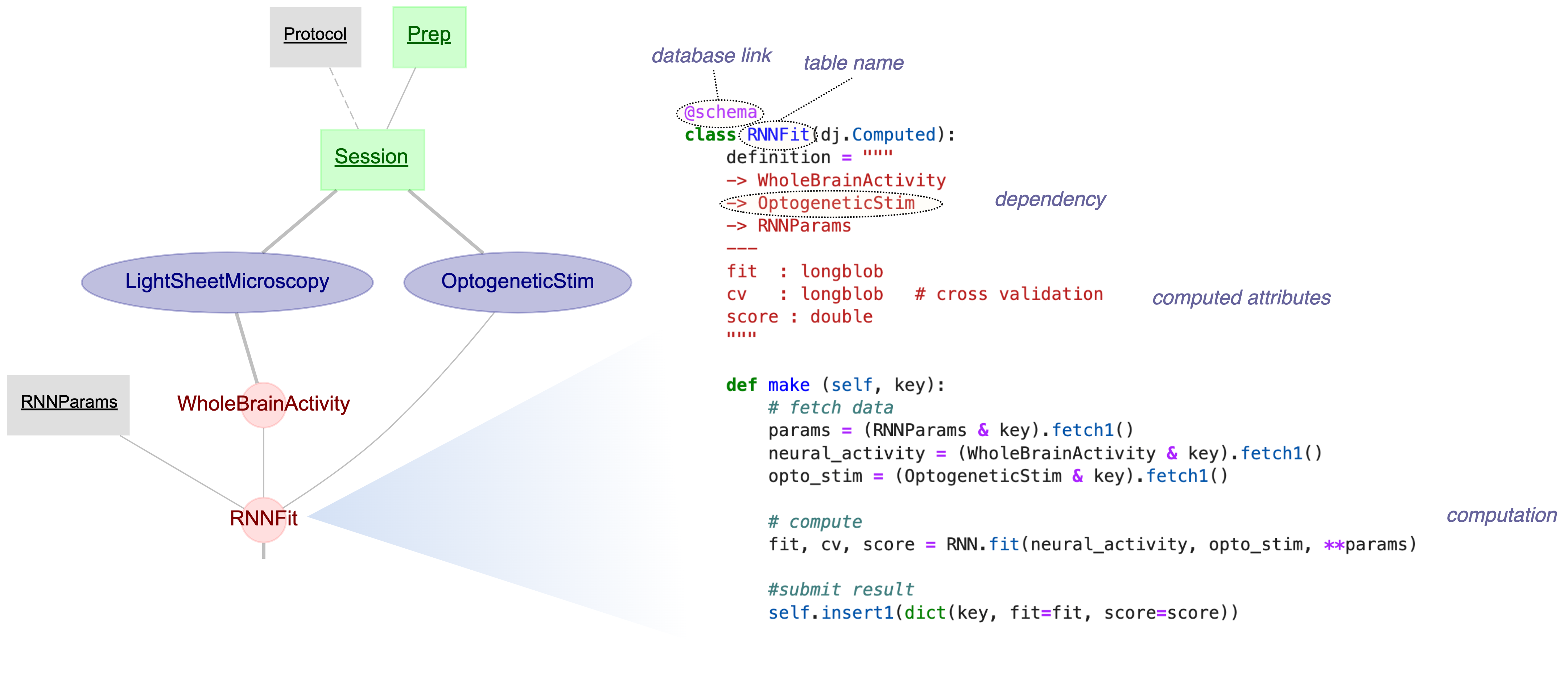

+DataJoint is a framework for scientific data pipelines that introduces the **Relational Workflow Model**—a paradigm where your database schema is an executable specification of your workflow.

+

+Traditional databases store data but don't understand how it was computed. DataJoint extends relational databases with native workflow semantics:

+

+- **Tables represent workflow steps** — Each table is a step in your pipeline where entities are created

+- **Foreign keys encode dependencies** — Parent tables must be populated before child tables

+- **Computations are declarative** — Define *what* to compute; DataJoint determines *when* and tracks *what's done*

+- **Results are immutable** — Computed results preserve full provenance and reproducibility

+

+### Object-Augmented Schemas

+

+Scientific data includes both structured metadata and large data objects (time series, images, movies, neural recordings, gene sequences). DataJoint solves this with **Object-Augmented Schemas (OAS)**—a unified architecture where relational tables and object storage are managed as one system with identical guarantees for integrity, transactions, and lifecycle.

+

+### DataJoint 2.0

+

+**DataJoint 2.0** solidifies these core concepts with a modernized API, improved type system, and enhanced object storage integration. Existing users can refer to the [Migration Guide](https://docs.datajoint.com/migration/) for upgrading from earlier versions.

+

+**Documentation:** https://docs.datajoint.com

-DataJoint for Python is a framework for scientific workflow management based on

-relational principles. DataJoint is built on the foundation of the relational data

-model and prescribes a consistent method for organizing, populating, computing, and

-querying data.

-

-DataJoint was initially developed in 2009 by Dimitri Yatsenko in Andreas Tolias' Lab at

-Baylor College of Medicine for the distributed processing and management of large

-volumes of data streaming from regular experiments. Starting in 2011, DataJoint has

-been available as an open-source project adopted by other labs and improved through

-contributions from several developers.

-Presently, the primary developer of DataJoint open-source software is the company

-DataJoint ().

-

## Data Pipeline Example

diff --git a/docs/mkdocs.yaml b/docs/mkdocs.yaml

index 03c10f69b..db2ea16f9 100644

--- a/docs/mkdocs.yaml

+++ b/docs/mkdocs.yaml

@@ -1,82 +1,16 @@

# ---------------------- PROJECT SPECIFIC ---------------------------

-site_name: DataJoint Documentation

+site_name: DataJoint Python - Developer Documentation

+site_description: Developer documentation for DataJoint Python contributors

repo_url: https://github.com/datajoint/datajoint-python

repo_name: datajoint/datajoint-python

nav:

- - DataJoint Python: index.md

- - Quick Start Guide: quick-start.md

- - Concepts:

- - Principles: concepts/principles.md

- - Data Model: concepts/data-model.md

- - Data Pipelines: concepts/data-pipelines.md

- - Teamwork: concepts/teamwork.md

- - Terminology: concepts/terminology.md

- - System Administration:

- - Database Administration: sysadmin/database-admin.md

- - Bulk Storage Systems: sysadmin/bulk-storage.md

- - External Store: sysadmin/external-store.md

- - Client Configuration:

- - Install: client/install.md

- - Credentials: client/credentials.md

- - Settings: client/settings.md

- - File Stores: client/stores.md

- - Schema Design:

- - Schema Creation: design/schema.md

- - Table Definition:

- - Table Tiers: design/tables/tiers.md

- - Declaration Syntax: design/tables/declare.md

- - Primary Key: design/tables/primary.md

- - Attributes: design/tables/attributes.md

- - Lookup Tables: design/tables/lookup.md

- - Manual Tables: design/tables/manual.md

- - Blobs: design/tables/blobs.md

- - Attachments: design/tables/attach.md

- - Filepaths: design/tables/filepath.md

- - Custom Codecs: design/tables/codecs.md

- - Dependencies: design/tables/dependencies.md

- - Indexes: design/tables/indexes.md

- - Master-Part Relationships: design/tables/master-part.md

- - Schema Diagrams: design/diagrams.md

- - Entity Normalization: design/normalization.md

- - Data Integrity: design/integrity.md

- - Schema Recall: design/recall.md

- - Schema Drop: design/drop.md

- - Schema Modification: design/alter.md

- - Data Manipulations:

- - manipulation/index.md

- - Insert: manipulation/insert.md

- - Delete: manipulation/delete.md

- - Update: manipulation/update.md

- - Transactions: manipulation/transactions.md

- - Data Queries:

- - Principles: query/principles.md

- - Example Schema: query/example-schema.md

- - Fetch: query/fetch.md

- - Iteration: query/iteration.md

- - Operators: query/operators.md

- - Restrict: query/restrict.md

- - Projection: query/project.md

- - Join: query/join.md

- - Aggregation: query/aggregation.md

- - Union: query/union.md

- - Universal Sets: query/universals.md

- - Query Caching: query/query-caching.md

- - Computations:

- - Make Method: compute/make.md

- - Populate: compute/populate.md

- - Key Source: compute/key-source.md

- - Distributed Computing: compute/distributed.md

- - Publish Data: publish-data.md

- - Internals:

- - SQL Transpilation: internal/transpilation.md

- - Tutorials:

- - JSON Datatype: tutorials/json.ipynb

- - FAQ: faq.md

- - Developer Guide: develop.md

- - Citation: citation.md

- - Changelog: changelog.md

- - API: api/ # defer to gen-files + literate-nav

+ - Home: index.md

+ - Contributing: develop.md

+ - Architecture:

+ - architecture/index.md

+ - SQL Transpilation: architecture/transpilation.md

+ - API Reference: api/ # defer to gen-files + literate-nav

# ---------------------------- STANDARD -----------------------------

@@ -93,7 +27,7 @@ theme:

favicon: assets/images/company-logo-blue.png

features:

- toc.integrate

- - content.code.annotate # Add codeblock annotations

+ - content.code.annotate

palette:

- media: "(prefers-color-scheme: light)"

scheme: datajoint

@@ -113,26 +47,18 @@ plugins:

handlers:

python:

paths:

- - "."

- - /main/

+ - "../src"

options:

- filters:

- - "!^_"

- docstring_style: sphinx # Replaces google default pending docstring updates

+ docstring_style: numpy

members_order: source

group_by_category: false

line_length: 88

+ show_source: false

- gen-files:

scripts:

- ./src/api/make_pages.py

- literate-nav:

nav_file: navigation.md

- - exclude-search:

- exclude:

- - "*/navigation.md"

- - "*/archive/*md"

- - mkdocs-jupyter:

- include: ["*.ipynb"]

- section-index

markdown_extensions:

- attr_list

@@ -154,41 +80,23 @@ markdown_extensions:

- name: mermaid

class: mermaid

format: !!python/name:pymdownx.superfences.fence_code_format

- - pymdownx.magiclink # Displays bare URLs as links

- - pymdownx.tasklist: # Renders check boxes in tasks lists

+ - pymdownx.magiclink

+ - pymdownx.tasklist:

custom_checkbox: true

- md_in_html

extra:

- generator: false # Disable watermark

+ generator: false

version:

provider: mike

social:

- icon: main/company-logo

link: https://www.datajoint.com

name: DataJoint

- - icon: fontawesome/brands/slack

- link: https://datajoint.slack.com

- name: Slack

- - icon: fontawesome/brands/linkedin

- link: https://www.linkedin.com/company/datajoint

- name: LinkedIn

- - icon: fontawesome/brands/twitter

- link: https://twitter.com/datajoint

- name: Twitter

- icon: fontawesome/brands/github

link: https://github.com/datajoint

name: GitHub

- - icon: fontawesome/brands/docker

- link: https://hub.docker.com/u/datajoint

- name: DockerHub

- - icon: fontawesome/brands/python

- link: https://pypi.org/user/datajointbot

- name: PyPI

- - icon: fontawesome/brands/stack-overflow

- link: https://stackoverflow.com/questions/tagged/datajoint

- name: StackOverflow

- - icon: fontawesome/brands/youtube

- link: https://www.youtube.com/channel/UCdeCuFOTCXlVMRzh6Wk-lGg

- name: YouTube

+ - icon: fontawesome/brands/slack

+ link: https://datajoint.slack.com

+ name: Slack

extra_css:

- assets/stylesheets/extra.css

diff --git a/docs/src/architecture/index.md b/docs/src/architecture/index.md

new file mode 100644

index 000000000..953fd7962

--- /dev/null

+++ b/docs/src/architecture/index.md

@@ -0,0 +1,34 @@

+# Architecture

+

+Internal design documentation for DataJoint developers.

+

+## Query System

+

+- [SQL Transpilation](transpilation.md) — How DataJoint translates query expressions to SQL

+

+## Design Principles

+

+DataJoint's architecture follows several key principles:

+

+1. **Immutable Query Expressions** — Query expressions are immutable; operators create new objects

+2. **Lazy Evaluation** — Queries are not executed until data is fetched

+3. **Query Optimization** — Unnecessary attributes are projected out before execution

+4. **Semantic Matching** — Joins use lineage-based attribute matching

+

+## Module Overview

+

+| Module | Purpose |

+|--------|---------|

+| `expression.py` | QueryExpression base class and operators |

+| `table.py` | Table class with data manipulation |

+| `fetch.py` | Data retrieval implementation |

+| `declare.py` | Table definition parsing |

+| `heading.py` | Attribute and heading management |

+| `blob.py` | Blob serialization |

+| `codecs.py` | Type codec system |

+| `connection.py` | Database connection management |

+| `schemas.py` | Schema binding and activation |

+

+## Contributing

+

+See the [Contributing Guide](../develop.md) for development setup instructions.

diff --git a/docs/src/internal/transpilation.md b/docs/src/architecture/transpilation.md

similarity index 100%

rename from docs/src/internal/transpilation.md

rename to docs/src/architecture/transpilation.md

diff --git a/docs/src/citation.md b/docs/src/archive/citation.md

similarity index 100%

rename from docs/src/citation.md

rename to docs/src/archive/citation.md

diff --git a/docs/src/client/credentials.md b/docs/src/archive/client/credentials.md

similarity index 100%

rename from docs/src/client/credentials.md

rename to docs/src/archive/client/credentials.md

diff --git a/docs/src/client/install.md b/docs/src/archive/client/install.md

similarity index 100%

rename from docs/src/client/install.md

rename to docs/src/archive/client/install.md

diff --git a/docs/src/client/settings.md b/docs/src/archive/client/settings.md

similarity index 100%

rename from docs/src/client/settings.md

rename to docs/src/archive/client/settings.md

diff --git a/docs/src/compute/autopopulate2.0-spec.md b/docs/src/archive/compute/autopopulate2.0-spec.md

similarity index 100%

rename from docs/src/compute/autopopulate2.0-spec.md

rename to docs/src/archive/compute/autopopulate2.0-spec.md

diff --git a/docs/src/compute/distributed.md b/docs/src/archive/compute/distributed.md

similarity index 100%

rename from docs/src/compute/distributed.md

rename to docs/src/archive/compute/distributed.md

diff --git a/docs/src/compute/key-source.md b/docs/src/archive/compute/key-source.md

similarity index 100%

rename from docs/src/compute/key-source.md

rename to docs/src/archive/compute/key-source.md

diff --git a/docs/src/compute/make.md b/docs/src/archive/compute/make.md

similarity index 100%

rename from docs/src/compute/make.md

rename to docs/src/archive/compute/make.md

diff --git a/docs/src/compute/populate.md b/docs/src/archive/compute/populate.md

similarity index 100%

rename from docs/src/compute/populate.md

rename to docs/src/archive/compute/populate.md

diff --git a/docs/src/concepts/data-model.md b/docs/src/archive/concepts/data-model.md

similarity index 100%

rename from docs/src/concepts/data-model.md

rename to docs/src/archive/concepts/data-model.md

diff --git a/docs/src/concepts/data-pipelines.md b/docs/src/archive/concepts/data-pipelines.md

similarity index 100%

rename from docs/src/concepts/data-pipelines.md

rename to docs/src/archive/concepts/data-pipelines.md

diff --git a/docs/src/concepts/principles.md b/docs/src/archive/concepts/principles.md

similarity index 100%

rename from docs/src/concepts/principles.md

rename to docs/src/archive/concepts/principles.md

diff --git a/docs/src/concepts/teamwork.md b/docs/src/archive/concepts/teamwork.md

similarity index 100%

rename from docs/src/concepts/teamwork.md

rename to docs/src/archive/concepts/teamwork.md

diff --git a/docs/src/concepts/terminology.md b/docs/src/archive/concepts/terminology.md

similarity index 100%

rename from docs/src/concepts/terminology.md

rename to docs/src/archive/concepts/terminology.md

diff --git a/docs/src/design/alter.md b/docs/src/archive/design/alter.md

similarity index 100%

rename from docs/src/design/alter.md

rename to docs/src/archive/design/alter.md

diff --git a/docs/src/design/diagrams.md b/docs/src/archive/design/diagrams.md

similarity index 100%

rename from docs/src/design/diagrams.md

rename to docs/src/archive/design/diagrams.md

diff --git a/docs/src/design/drop.md b/docs/src/archive/design/drop.md

similarity index 100%

rename from docs/src/design/drop.md

rename to docs/src/archive/design/drop.md

diff --git a/docs/src/design/fetch-api-2.0-spec.md b/docs/src/archive/design/fetch-api-2.0-spec.md

similarity index 100%

rename from docs/src/design/fetch-api-2.0-spec.md

rename to docs/src/archive/design/fetch-api-2.0-spec.md

diff --git a/docs/src/design/hidden-job-metadata-spec.md b/docs/src/archive/design/hidden-job-metadata-spec.md

similarity index 100%

rename from docs/src/design/hidden-job-metadata-spec.md

rename to docs/src/archive/design/hidden-job-metadata-spec.md

diff --git a/docs/src/design/integrity.md b/docs/src/archive/design/integrity.md

similarity index 100%

rename from docs/src/design/integrity.md

rename to docs/src/archive/design/integrity.md

diff --git a/docs/src/design/normalization.md b/docs/src/archive/design/normalization.md

similarity index 100%

rename from docs/src/design/normalization.md

rename to docs/src/archive/design/normalization.md

diff --git a/docs/src/design/pk-rules-spec.md b/docs/src/archive/design/pk-rules-spec.md

similarity index 100%

rename from docs/src/design/pk-rules-spec.md

rename to docs/src/archive/design/pk-rules-spec.md

diff --git a/docs/src/design/recall.md b/docs/src/archive/design/recall.md

similarity index 100%

rename from docs/src/design/recall.md

rename to docs/src/archive/design/recall.md

diff --git a/docs/src/design/schema.md b/docs/src/archive/design/schema.md

similarity index 100%

rename from docs/src/design/schema.md

rename to docs/src/archive/design/schema.md

diff --git a/docs/src/design/semantic-matching-spec.md b/docs/src/archive/design/semantic-matching-spec.md

similarity index 100%

rename from docs/src/design/semantic-matching-spec.md

rename to docs/src/archive/design/semantic-matching-spec.md

diff --git a/docs/src/design/tables/attach.md b/docs/src/archive/design/tables/attach.md

similarity index 100%

rename from docs/src/design/tables/attach.md

rename to docs/src/archive/design/tables/attach.md

diff --git a/docs/src/design/tables/attributes.md b/docs/src/archive/design/tables/attributes.md

similarity index 100%

rename from docs/src/design/tables/attributes.md

rename to docs/src/archive/design/tables/attributes.md

diff --git a/docs/src/design/tables/blobs.md b/docs/src/archive/design/tables/blobs.md

similarity index 100%

rename from docs/src/design/tables/blobs.md

rename to docs/src/archive/design/tables/blobs.md

diff --git a/docs/src/design/tables/codec-spec.md b/docs/src/archive/design/tables/codec-spec.md

similarity index 100%

rename from docs/src/design/tables/codec-spec.md

rename to docs/src/archive/design/tables/codec-spec.md

diff --git a/docs/src/design/tables/codecs.md b/docs/src/archive/design/tables/codecs.md

similarity index 100%

rename from docs/src/design/tables/codecs.md

rename to docs/src/archive/design/tables/codecs.md

diff --git a/docs/src/design/tables/declare.md b/docs/src/archive/design/tables/declare.md

similarity index 100%

rename from docs/src/design/tables/declare.md

rename to docs/src/archive/design/tables/declare.md

diff --git a/docs/src/design/tables/dependencies.md b/docs/src/archive/design/tables/dependencies.md

similarity index 100%

rename from docs/src/design/tables/dependencies.md

rename to docs/src/archive/design/tables/dependencies.md

diff --git a/docs/src/design/tables/filepath.md b/docs/src/archive/design/tables/filepath.md

similarity index 100%

rename from docs/src/design/tables/filepath.md

rename to docs/src/archive/design/tables/filepath.md

diff --git a/docs/src/design/tables/indexes.md b/docs/src/archive/design/tables/indexes.md

similarity index 100%

rename from docs/src/design/tables/indexes.md

rename to docs/src/archive/design/tables/indexes.md

diff --git a/docs/src/design/tables/lookup.md b/docs/src/archive/design/tables/lookup.md

similarity index 100%

rename from docs/src/design/tables/lookup.md

rename to docs/src/archive/design/tables/lookup.md

diff --git a/docs/src/design/tables/manual.md b/docs/src/archive/design/tables/manual.md

similarity index 100%

rename from docs/src/design/tables/manual.md

rename to docs/src/archive/design/tables/manual.md

diff --git a/docs/src/design/tables/master-part.md b/docs/src/archive/design/tables/master-part.md

similarity index 100%

rename from docs/src/design/tables/master-part.md

rename to docs/src/archive/design/tables/master-part.md

diff --git a/docs/src/design/tables/object.md b/docs/src/archive/design/tables/object.md

similarity index 100%

rename from docs/src/design/tables/object.md

rename to docs/src/archive/design/tables/object.md

diff --git a/docs/src/design/tables/primary.md b/docs/src/archive/design/tables/primary.md

similarity index 100%

rename from docs/src/design/tables/primary.md

rename to docs/src/archive/design/tables/primary.md

diff --git a/docs/src/design/tables/storage-types-spec.md b/docs/src/archive/design/tables/storage-types-spec.md

similarity index 100%

rename from docs/src/design/tables/storage-types-spec.md

rename to docs/src/archive/design/tables/storage-types-spec.md

diff --git a/docs/src/design/tables/tiers.md b/docs/src/archive/design/tables/tiers.md

similarity index 100%

rename from docs/src/design/tables/tiers.md

rename to docs/src/archive/design/tables/tiers.md

diff --git a/docs/src/faq.md b/docs/src/archive/faq.md

similarity index 100%

rename from docs/src/faq.md

rename to docs/src/archive/faq.md

diff --git a/docs/src/images/StudentTable.png b/docs/src/archive/images/StudentTable.png

similarity index 100%

rename from docs/src/images/StudentTable.png

rename to docs/src/archive/images/StudentTable.png

diff --git a/docs/src/images/added-example-ERD.svg b/docs/src/archive/images/added-example-ERD.svg

similarity index 100%

rename from docs/src/images/added-example-ERD.svg

rename to docs/src/archive/images/added-example-ERD.svg

diff --git a/docs/src/images/data-engineering.png b/docs/src/archive/images/data-engineering.png

similarity index 100%

rename from docs/src/images/data-engineering.png

rename to docs/src/archive/images/data-engineering.png

diff --git a/docs/src/images/data-science-after.png b/docs/src/archive/images/data-science-after.png

similarity index 100%

rename from docs/src/images/data-science-after.png

rename to docs/src/archive/images/data-science-after.png

diff --git a/docs/src/images/data-science-before.png b/docs/src/archive/images/data-science-before.png

similarity index 100%

rename from docs/src/images/data-science-before.png

rename to docs/src/archive/images/data-science-before.png

diff --git a/docs/src/images/diff-example1.png b/docs/src/archive/images/diff-example1.png

similarity index 100%

rename from docs/src/images/diff-example1.png

rename to docs/src/archive/images/diff-example1.png

diff --git a/docs/src/images/diff-example2.png b/docs/src/archive/images/diff-example2.png

similarity index 100%

rename from docs/src/images/diff-example2.png

rename to docs/src/archive/images/diff-example2.png

diff --git a/docs/src/images/diff-example3.png b/docs/src/archive/images/diff-example3.png

similarity index 100%

rename from docs/src/images/diff-example3.png

rename to docs/src/archive/images/diff-example3.png

diff --git a/docs/src/images/dimitri-ERD.svg b/docs/src/archive/images/dimitri-ERD.svg

similarity index 100%

rename from docs/src/images/dimitri-ERD.svg

rename to docs/src/archive/images/dimitri-ERD.svg

diff --git a/docs/src/images/doc_1-1.png b/docs/src/archive/images/doc_1-1.png

similarity index 100%

rename from docs/src/images/doc_1-1.png

rename to docs/src/archive/images/doc_1-1.png

diff --git a/docs/src/images/doc_1-many.png b/docs/src/archive/images/doc_1-many.png

similarity index 100%

rename from docs/src/images/doc_1-many.png

rename to docs/src/archive/images/doc_1-many.png

diff --git a/docs/src/images/doc_many-1.png b/docs/src/archive/images/doc_many-1.png

similarity index 100%

rename from docs/src/images/doc_many-1.png

rename to docs/src/archive/images/doc_many-1.png

diff --git a/docs/src/images/doc_many-many.png b/docs/src/archive/images/doc_many-many.png